Go Serverless and save the planet

With Serverless we can scale down to zero, and the underlying hardware can be shared between many modules of our applications. Using hardware only when it is needed, and where it is needed most (with autoscaling), is key to increasing performance while reducing costs. As a result, with fewer servers we also contribute to cooling down the planet. It’s amazing to see that many young people are trying to save the planet from global warming. A power transition seems to be happening (e.g. in Europe), and we can hope for better decisions going forward 🤞. Technology can also help us on this path; let’s hope we keep moving forward and maybe even become a multiplanetary species. What this post can do today is encourage people in software development to consider solutions that are friendlier to planet Earth, and to help each other adopt them. Having said that, some people think trees are a faster solution to climate change, which, frankly, is a simple and practical way to get into action ✌.

Cloud Native Computing Foundation (CNCF)

landscape.cncf.io/serverless is a great place to see what is happening in the Serverless world and to compare projects by stars, commit frequency, etc. It’s worth your time to evaluate the available options and compare them before adopting one, and/or to find a domain expert in the Serverless world and follow their advice.

Knative platform

In this post we are going to install the Knative platform and configure it for production use with the minimum required hardware. Why Knative? For me it was this post at first, and after reading the Continuous Delivery for Kubernetes book I was sure that Kubernetes and Knative needed to be in my Serverless adventure’s backpack. Plus, I live in a third-world country, which means no AWS and no easy access to ready-to-use public cloud providers. But renting a server (with the spec below) can cost as little as $8 a month. So some people are forced to go with the self-hosted option, and this post may help them.

Complete guide to self-host the Knative platform

Requirements

- A server with a public IPv4 address (e.g. 13.49.80.99)
  - 8-core CPU (6 cores could also work)
  - 16G RAM (6G could also work)
  - 30G disk (make sure you can add more storage if needed)
- A domain name (e.g. example.com)

First, make sure your server has no restrictions on accessing the internet and Docker registries.
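A small preflight script can check a freshly rented server against the list above. The sketch below is a hypothetical helper; the names `meets_min` and `preflight` are mine and not part of any tool. It assumes a Linux host with GNU coreutils and uses the lower "could work" values as thresholds. It checks the size of the root filesystem, which is usually a little smaller than the nominal disk, so a borderline disk FAIL deserves a manual look.

```bash
#!/usr/bin/env bash
# Hypothetical preflight helper (not part of the original guide).
# Thresholds are the lower "could work" values from the requirements list.

# meets_min NAME HAVE NEED: print PASS/FAIL and return 1 when HAVE < NEED
meets_min() {
  local name=$1 have=$2 need=$3
  if [ "$have" -ge "$need" ]; then
    echo "PASS $name: $have >= $need"
  else
    echo "FAIL $name: $have < $need"
    return 1
  fi
}

# preflight: gather CPU, RAM and disk figures (Linux + GNU coreutils assumed)
preflight() {
  local cpus mem_gib disk_gib rc=0
  cpus=$(nproc)
  # MemTotal is in kB; round to the nearest GiB because usable RAM is a bit below nominal
  mem_gib=$(awk '/^MemTotal:/ {printf "%d", $2 / 1048576 + 0.5}' /proc/meminfo)
  # Total size of the root filesystem in GiB (df prints e.g. "30G")
  disk_gib=$(df -BG --output=size / | tail -n 1 | tr -dc '0-9')
  meets_min "cpu cores" "$cpus" 6 || rc=1
  meets_min "memory GiB" "$mem_gib" 6 || rc=1
  meets_min "disk GiB on /" "$disk_gib" 30 || rc=1
  return "$rc"
}
```

Source the file and run `preflight`. It prints one PASS/FAIL line per resource and returns non-zero if anything is below the minimum.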

Tested environment

- Ubuntu 20.04 LTS

- Microk8s 1.27/stable

- Istio 1.17.2

- Knative 1.10.1

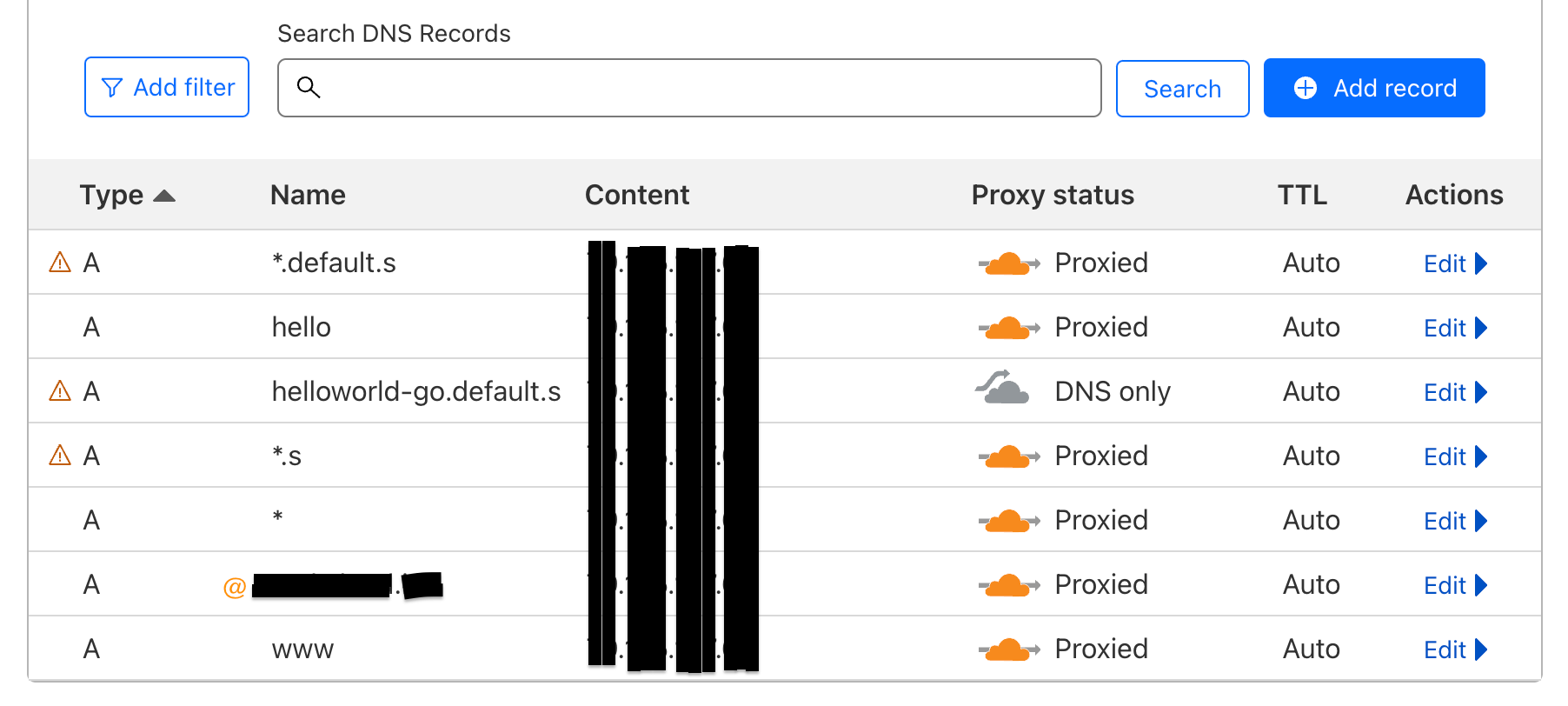

Required DNS Records

Following screenshot shows required type A DNS Records.
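The records can also be listed as text. The helper below is a sketch built on an assumption: it derives the names from the hostnames used later in this guide (the certificate's `dnsNames`, `reg.example.com`, `hello.example.com` and the Knative URLs), all pointing at the server's public IP. It is not a copy of the screenshot. The function names are hypothetical, and `check_dns` needs `dig` (the `dnsutils` package on Ubuntu).

```bash
#!/usr/bin/env bash
# Hypothetical helpers: record names are inferred from the hostnames this guide
# uses later (certificate dnsNames, registry and Knative URLs), not from the screenshot.

# dns_records DOMAIN IP: print one "NAME A IP" line per record to create
dns_records() {
  local domain=$1 ip=$2 name
  for name in "$domain" "*.$domain" "*.s.$domain" "*.default.s.$domain"; do
    printf '%s A %s\n' "$name" "$ip"
  done
}

# check_dns DOMAIN IP: resolve a few concrete hostnames and compare them with IP
check_dns() {
  local domain=$1 ip=$2 host got rc=0
  for host in "$domain" "reg.$domain" "hello.$domain" "helloworld-go.default.s.$domain"; do
    # dig +short may print a CNAME chain first; the last line is the address
    got=$(dig +short A "$host" | tail -n 1)
    if [ "$got" = "$ip" ]; then
      echo "OK   $host -> $got"
    else
      echo "MISS $host -> ${got:-<nothing>} (expected $ip)"
      rc=1
    fi
  done
  return "$rc"
}
```

`dns_records example.com 13.49.80.99` prints the records to create. Once they have propagated, `check_dns example.com 13.49.80.99` should report OK for every host.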

Install Microk8s

Microk8s is known as a lightweight Kubernetes distribution, optimized to use as few resources as possible while still offering what a regular Kubernetes cluster can do. So it fits our overall goal of going Serverless with fewer servers.

```
sudo snap install microk8s --classic --channel=1.27/stable
```

At the moment, 1.27 is the latest stable version of Microk8s; you can check here to choose the right channel. Please note that a different version might behave differently in the following steps, and you might face issues that we didn’t.

```
sudo usermod -a -G microk8s $USER
sudo chown -f -R $USER ~/.kube
su - $USER
microk8s status --wait-ready
```

output

```
microk8s is running
high-availability: no
  datastore master nodes: 127.0.0.1:19001
  datastore standby nodes: none
addons:
  enabled:
    dns # (core) CoreDNS
    ha-cluster # (core) Configure high availability on the current node
    helm # (core) Helm - the package manager for Kubernetes
    helm3 # (core) Helm 3 - the package manager for Kubernetes
  disabled:
    cert-manager # (core) Cloud native certificate management
    community # (core) The community addons repository
    dashboard # (core) The Kubernetes dashboard
    gpu # (core) Automatic enablement of Nvidia CUDA
    host-access # (core) Allow Pods connecting to Host services smoothly
    hostpath-storage # (core) Storage class; allocates storage from host directory
    ingress # (core) Ingress controller for external access
    kube-ovn # (core) An advanced network fabric for Kubernetes
    mayastor # (core) OpenEBS MayaStor
    metallb # (core) Loadbalancer for your Kubernetes cluster
    metrics-server # (core) K8s Metrics Server for API access to service metrics
    minio # (core) MinIO object storage
    observability # (core) A lightweight observability stack for logs, traces and metrics
    prometheus # (core) Prometheus operator for monitoring and logging
    rbac # (core) Role-Based Access Control for authorisation
    registry # (core) Private image registry exposed on localhost:32000
    storage # (core) Alias to hostpath-storage add-on, deprecated
```

Optional - disabling ha-cluster

Personally, I think that if you don’t have a fast private network between your servers, or fast storage shared between them, the Microk8s high-availability feature might not work as well as expected. In my case the CPU was constantly about 20% busy. Unfortunately this is not a decision you can change later in your deployment: switching between ha-cluster and non-ha-cluster resets your cluster to its initial state (it removes everything!). In this setup we are going to have one server with one node, and we prefer lower CPU usage, so we used the following command to disable it.

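```
microk8s disable ha-cluster --force
microk8s kubectl get all -A
```

output

```
NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
default service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 77s
```

Re-enable the dns addon

As the output shows, the reset also removed CoreDNS (there is no kube-dns service anymore), so enable the dns addon again:

```
microk8s enable dns
```

Istio as ingress controller and network layer for Knative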

In this guide we are going to use istioctl to install Istio, and we will keep the istio-system and istio-ingress namespaces separate for better security.

First we need to copy the Microk8s config to the default location for the current user:

```
mkdir -p ~/.kube
microk8s config > ~/.kube/config
```

Then add a kubectl alias to your ~/.bashrc:

```
alias kubectl='microk8s kubectl'
```
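If you script your server setup, appending the alias blindly adds a duplicate line every time the script runs. Here is a minimal sketch of an idempotent append. The `append_once` name is hypothetical, not a standard command.

```bash
#!/usr/bin/env bash
# append_once FILE LINE: append LINE to FILE unless an identical line already exists
append_once() {
  local file=$1 line=$2
  touch "$file"
  # -x: whole-line match, -F: fixed string (the alias contains quotes), -q: quiet
  if ! grep -qxF -- "$line" "$file"; then
    # start on a fresh line if the file does not end with a newline
    if [ -s "$file" ] && [ -n "$(tail -c 1 "$file")" ]; then
      printf '\n' >> "$file"
    fi
    printf '%s\n' "$line" >> "$file"
  fi
}
```

Usage: `append_once ~/.bashrc "alias kubectl='microk8s kubectl'"`, then open a new shell or run `source ~/.bashrc`. Keep in mind that Bash expands aliases only in interactive shells, so inside scripts call `microk8s kubectl` directly.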

Or install kubectl with the following command (if you want to use krew plugins):

```
sudo snap install kubectl --classic
```

Then download Istio and install it with the minimal profile:

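```
mkdir -p ~/etc
cd ~/etc
# Pin the version so the directory name below matches the download
curl -L https://istio.io/downloadIstio | ISTIO_VERSION=1.17.2 sh -
cd ~/etc/istio-1.17.2/
export PATH=$PWD/bin:$PATH
istioctl install --set profile=minimal -y
```

Check the installation: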

```
kubectl get all -n istio-system
```

output

```
NAME READY STATUS RESTARTS AGE
pod/istiod-57c965889-pdpv5 1/1 Running 0 28m

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/istiod ClusterIP 10.152.183.139 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 28m

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/istiod 1/1 1 1 28m

NAME DESIRED CURRENT READY AGE
replicaset.apps/istiod-57c965889 1 1 1 28m

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
horizontalpodautoscaler.autoscaling/istiod Deployment/istiod <unknown>/80% 1 5 1 28m
```

Install istio-ingress with IstioOperator

```
istioctl operator init
```

output

```
Installing operator controller in namespace: istio-operator using image: docker.io/istio/operator:1.17.2
Operator controller will watch namespaces: istio-system
✔ Istio operator installed
✔ Installation complete
```

istio-ingress-operator.yaml

```yaml
apiVersion: install.istio.io/v1alpha1
kind: IstioOperator
metadata:
  name: ingress
  namespace: istio-system
spec:
  profile: empty # Do not install CRDs or the control plane
  components:
    ingressGateways:
      - name: istio-ingressgateway
        namespace: istio-ingress
        enabled: true
        label:
          # Set a unique label for the gateway. This is required to ensure Gateways
          # can select this workload
          istio: ingressgateway
  values:
    gateways:
      istio-ingressgateway:
        # Enable gateway injection
        injectionTemplate: gateway
```

```
kubectl create ns istio-ingress
kubectl apply -f istio-ingress-operator.yaml
```

Verify it is ready:

```
kubectl get all -n istio-ingress
```

output

```
NAME READY STATUS RESTARTS AGE
pod/istio-ingressgateway-77b5b78896-bwvjw 1/1 Running 0 4m48s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/istio-ingressgateway LoadBalancer 10.152.183.50 <pending> 15021:30688/TCP,80:30792/TCP,443:32182/TCP 4m48s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/istio-ingressgateway 1/1 1 1 4m48s

NAME DESIRED CURRENT READY AGE
replicaset.apps/istio-ingressgateway-77b5b78896 1 1 1 4m48s

NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
horizontalpodautoscaler.autoscaling/istio-ingressgateway Deployment/istio-ingressgateway <unknown>/80% 1 5 1 4m48s
```

Enable the metallb addon

```
microk8s enable metallb:10.0.0.5-10.0.0.250
```

After a successful installation, your service should get an external IP from the bare-metal load balancer:

```
kubectl get svc -n istio-ingress
```

output

```
NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
istio-ingressgateway LoadBalancer 10.152.183.50 10.0.0.5 15021:30688/TCP,80:30792/TCP,443:32182/TCP 17m
```

Bind istio-ingressgateway to host ports 80 and 443

```
kubectl edit deploy istio-ingressgateway -n istio-ingress
```

istio-ingressgateway

```yaml
spec:
  template:
    spec:
      containers:
      - env:
        # ... (other fields unchanged)
        ports:
        - containerPort: 15021
          protocol: TCP
        - containerPort: 8080
          hostPort: 80 # <- Add this line
          protocol: TCP
        - containerPort: 8443
          hostPort: 443 # <- Add this line too
```

Please note that because of this binding we can’t run more than one istio-ingressgateway pod per node, so in our case we set replicas to 1. For a production environment with more than one node, we could set replicas to the number of nodes and make sure each node runs exactly one istio-ingressgateway pod (for example with pod anti-affinity).

Enable cert-manager addons

```
microk8s enable cert-manager
```

Verify it is ready:

```
kubectl get all -n cert-manager
```

output

```
NAME READY STATUS RESTARTS AGE
pod/cert-manager-5d6bc46969-btqdd 1/1 Running 0 5m58s
pod/cert-manager-cainjector-7d8b8bb6b8-rjsx5 1/1 Running 0 5m58s
pod/cert-manager-webhook-5c5c5bb457-6zjcp 1/1 Running 0 5m58s

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
service/cert-manager ClusterIP 10.152.183.71 <none> 9402/TCP 5m58s
service/cert-manager-webhook ClusterIP 10.152.183.177 <none> 443/TCP 5m58s

NAME READY UP-TO-DATE AVAILABLE AGE
deployment.apps/cert-manager 1/1 1 1 5m58s
deployment.apps/cert-manager-cainjector 1/1 1 1 5m58s
deployment.apps/cert-manager-webhook 1/1 1 1 5m58s

NAME DESIRED CURRENT READY AGE
replicaset.apps/cert-manager-5d6bc46969 1 1 1 5m58s
replicaset.apps/cert-manager-cainjector-7d8b8bb6b8 1 1 1 5m58s
replicaset.apps/cert-manager-webhook-5c5c5bb457 1 1 1 5m58s
```

ZeroSSL ClusterIssuer

zerossl-cluster-issuer.yaml

```yaml
apiVersion: v1
kind: Secret
metadata:
  namespace: cert-manager
  name: zerossl-eab
stringData:
  secret: <CHANGE-TO-ACTUAL-SECRET>
---
apiVersion: v1
kind: Secret
metadata:
  namespace: cert-manager
  name: cloudflare-api-token
type: Opaque
stringData:
  api-token: <CHANGE-TO-ACTUAL-CLOUDFLARE-API-TOKEN>
---
apiVersion: cert-manager.io/v1
kind: ClusterIssuer
metadata:
  name: zerossl-prod
spec:
  acme:
    # The ACME server URL
    server: https://acme.zerossl.com/v2/DV90
    externalAccountBinding:
      keyID: <CHANGE-TO-ACTUAL-KEY-ID>
      keySecretRef:
        name: zerossl-eab
        key: secret
    # Name of a secret used to store the ACME account private key
    privateKeySecretRef:
      name: zerossl-prod
    solvers:
    - dns01:
        cloudflare:
          apiTokenSecretRef:
            name: cloudflare-api-token
            key: api-token
```
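The manifest above contains `<CHANGE-TO-...>` placeholders. If you apply it unchanged, the secrets will hold the literal placeholder text, and the ClusterIssuer will most likely not become Ready. The small guard sketched below refuses to continue while any placeholder is left. The function name is hypothetical.

```bash
#!/usr/bin/env bash
# require_no_placeholders FILE: fail (and show the offending lines) while FILE
# still contains one of the <CHANGE-TO-...> markers used in the manifest above
require_no_placeholders() {
  local file=$1
  if [ ! -r "$file" ]; then
    echo "error: cannot read $file" >&2
    return 1
  fi
  # grep -n prints every remaining placeholder with its line number
  if grep -n -- '<CHANGE-TO-' "$file"; then
    echo "error: replace the placeholders listed above in $file first" >&2
    return 1
  fi
  return 0
}
```

Usage: `require_no_placeholders zerossl-cluster-issuer.yaml && kubectl apply -f zerossl-cluster-issuer.yaml`.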

```
kubectl apply -f zerossl-cluster-issuer.yaml
```

Verify it is ready:

```
kubectl get ClusterIssuer
```

output

```
NAME READY AGE
zerossl-prod True 6s
```

Certificate for the istio-ingress namespace

certificate.yaml

```yaml
apiVersion: cert-manager.io/v1
kind: Certificate
metadata:
  generation: 1
  name: star-example-com-tls
  namespace: istio-ingress
spec:
  dnsNames:
  - "example.com"
  - "*.example.com"
  - "*.s.example.com"
  - "*.default.s.example.com"
  issuerRef:
    group: cert-manager.io
    kind: ClusterIssuer
    name: zerossl-prod
  secretName: star-example-com-tls
  usages:
  - digital signature
  - key encipherment
```
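Why four `dnsNames`? In a TLS certificate a wildcard stands for exactly one DNS label (see RFC 6125). So `*.example.com` covers `reg.example.com` and `hello.example.com`, but it covers neither the apex `example.com` nor `helloworld-go.default.s.example.com`. The functions below are a simplified, hypothetical illustration of that rule: they handle only a leading `*.` and compare ASCII case-insensitively. They do not show how cert-manager or browsers implement matching.

```bash
#!/usr/bin/env bash
# to_lower STRING: ASCII lowercase (DNS names compare case-insensitively)
to_lower() { printf '%s' "$1" | LC_ALL=C tr 'A-Z' 'a-z'; }

# cert_name_matches PATTERN HOST: simplified certificate name check.
# A leading "*." stands for exactly one DNS label; anything else must match exactly.
cert_name_matches() {
  local pattern host suffix first
  pattern=$(to_lower "$1")
  host=$(to_lower "$2")
  if [[ $pattern == "*."* ]]; then
    suffix=${pattern#\*.}
    first=${host%%.*}
    # exactly one non-empty label in front of the suffix
    [[ $host == *.* && -n $first && ${host#*.} == "$suffix" ]]
  else
    [[ $host == "$pattern" ]]
  fi
}

# covered_by HOST NAME...: succeed if any of the certificate names covers HOST
covered_by() {
  local host=$1 name
  shift
  for name in "$@"; do
    cert_name_matches "$name" "$host" && return 0
  done
  return 1
}
```

With the four names above, `covered_by helloworld-go.default.s.example.com example.com '*.example.com' '*.s.example.com' '*.default.s.example.com'` succeeds. A service in another namespace, such as `hello.staging.s.example.com`, is not covered by this certificate.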

```
kubectl apply -f certificate.yaml
```

Verify it is ready:

```
kubectl get Certificate -n istio-ingress
```

output

```
NAME READY SECRET AGE
star-example-com-tls True star-example-com-tls 7m38s
```

Istio IngressClass

istio-ingress-class.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: IngressClass
metadata:
  name: istio
spec:
  controller: istio.io/ingress-controller
```

```
kubectl apply -f istio-ingress-class.yaml
```

Verify it is ready:

```
kubectl get IngressClass
```

output

```
NAME CONTROLLER PARAMETERS AGE
istio istio.io/ingress-controller <none> 9s
```

Optional - NFS Persistent Volumes

Next we are going to use NFS persistent volumes. This doc shows the required steps; at the end you should be able to run the following command and compare your output.

```
microk8s kubectl get storageclass
```

output

```
NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE
nfs-csi (default) nfs.csi.k8s.io Delete Immediate true 72s
```

If your StorageClass is not marked as default, you can use the following command:

```
microk8s kubectl patch storageclass nfs-csi -p '{"metadata": {"annotations":{"storageclass.kubernetes.io/is-default-class":"true"}}}'
```

Optional - Enable the registry addon

```
microk8s enable registry
```

Registry ingress

registry-ingress.yaml

```yaml
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: registry
  namespace: container-registry
  annotations:
    kubernetes.io/ingress.class: istio
    cert-manager.io/cluster-issuer: "zerossl-prod"
spec:
  rules:
  - host: reg.example.com
    http:
      paths:
      - path: /
        pathType: Prefix
        backend:
          service:
            name: registry
            port:
              number: 5000
  tls:
  - hosts:
    - reg.example.com
    secretName: star-example-com-tls
```

```
kubectl apply -f registry-ingress.yaml
```

Verify it is ready:

```
kubectl get ingress -n container-registry
```

output

```
NAME CLASS HOSTS ADDRESS PORTS AGE
registry <none> reg.example.com 80, 443 5m39s
```

At this point you should be able to reach your server from outside over HTTPS:

```
curl https://reg.example.com/v2/
```

output

```
{}
```

Knative Serving installation

We are going to install Knative using the Knative Operator; the latest version at the time of writing is 1.10.1.

```
kubectl apply -f https://github.com/knative/operator/releases/download/knative-v1.10.1/operator.yaml
```

Verify it is ready:

```
kubectl get deployment knative-operator -n default
```

output

```
NAME READY UP-TO-DATE AVAILABLE AGE
knative-operator 1/1 1 1 12m
```

knative-serving.yaml

```yaml
apiVersion: v1
kind: Namespace
metadata:
  name: knative-serving
---
apiVersion: operator.knative.dev/v1beta1
kind: KnativeServing
metadata:
  name: knative-serving
  namespace: knative-serving
spec:
  version: "1.10"
  ingress:
    istio:
      enabled: true
  config:
    domain:
      "s.example.com": ""
    network:
      auto-tls: "Enabled"
      autocreate-cluster-domain-claims: "true"
      namespace-wildcard-cert-selector: '{"matchExpressions": [{"key":"networking.knative.dev/enableWildcardCert", "operator": "In", "values":["true"]}]}'
    istio:
      gateway.knative-serving.knative-ingress-gateway: "istio-ingressgateway.istio-ingress.svc.cluster.local"
      local-gateway.knative-serving.knative-local-gateway: "knative-local-gateway.istio-ingress.svc.cluster.local"
    certmanager:
      issuerRef: |
        kind: ClusterIssuer
        name: zerossl-prod
```
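With `config.domain` set to `s.example.com`, Knative builds each service's hostname from its default domain template, `{{.Name}}.{{.Namespace}}.{{.Domain}}`. That template produces URLs like `helloworld-go.default.s.example.com` and explains the `*.default.s.example.com` certificate name. The sketch below mimics that template in shell. The function names are hypothetical, and the sketch models only the default template; a custom `domain-template` in `config-network` would change the result. Because the name and namespace each become a DNS label, the sketch also checks the basic lowercase, 63-character label rule.

```bash
#!/usr/bin/env bash
# is_dns_label STRING: 1-63 chars of lowercase ASCII letters, digits and '-',
# not starting or ending with '-'
is_dns_label() {
  local s=$1
  [ -n "$s" ] && [ "${#s}" -le 63 ] &&
    [ "$s" = "$(printf '%s' "$s" | LC_ALL=C tr -cd 'a-z0-9-')" ] &&
    [[ $s != -* && $s != *- ]]
}

# ksvc_url NAME NAMESPACE DOMAIN: mimic Knative's default domain template
# {{.Name}}.{{.Namespace}}.{{.Domain}}; https because auto-tls is Enabled above
ksvc_url() {
  local name=$1 namespace=$2 domain=$3
  if ! is_dns_label "$name" || ! is_dns_label "$namespace"; then
    echo "error: '$name' and '$namespace' must be lowercase DNS labels (max 63 chars)" >&2
    return 1
  fi
  printf 'https://%s.%s.%s\n' "$name" "$namespace" "$domain"
}
```

For example, `ksvc_url helloworld-go default s.example.com` prints `https://helloworld-go.default.s.example.com`, which matches the URL Knative reports for the test service later in this post.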

```
kubectl apply -f knative-serving.yaml
```

Verify it is ready:

```
kubectl get KnativeServing knative-serving -n knative-serving
```

output

```
NAME VERSION READY REASON
knative-serving 1.10.1 True
```

Knative Istio integration

```
kubectl apply -f https://github.com/knative/net-istio/releases/download/knative-v1.10.0/net-istio.yaml
```

knative-peer-authentication.yaml

```yaml
apiVersion: "security.istio.io/v1beta1"
kind: "PeerAuthentication"
metadata:
  name: "default"
  namespace: "knative-serving"
spec:
  mtls:
    mode: PERMISSIVE
```

```
kubectl apply -f knative-peer-authentication.yaml
```

Knative cert-manager integration

```
kubectl apply -f https://github.com/knative/net-certmanager/releases/download/knative-v1.10.0/release.yaml
```

Verify it is ready:

```
kubectl get deployment net-certmanager-controller -n knative-serving
```

output

```
NAME READY UP-TO-DATE AVAILABLE AGE
net-certmanager-controller 1/1 1 1 95s
```

Enable a wildcard Certificate for the default namespace

```
kubectl label ns default networking.knative.dev/enableWildcardCert=true
```

Ensure the ConfigMaps were updated successfully:

```
kubectl get configmap config-istio -n knative-serving -o yaml
kubectl get configmap config-domain -n knative-serving -o yaml
kubectl get configmap config-network -n knative-serving -o yaml
kubectl get configmap config-certmanager -n knative-serving -o yaml
```

HelloWorld service to test serving

hello-service.yaml

```yaml
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-go
  namespace: default
spec:
  template:
    spec:
      containers:
      - image: ghcr.io/knative/helloworld-go:latest
        env:
        - name: TARGET
          value: "Go Sample v1"
```
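Knative can scale this service down to zero; the `kubectl get all -A` output at the end of this post shows `helloworld-go-00001-deployment` at 0/0. The first request after an idle period therefore waits for a pod to start, and right after the first deploy TLS may still be settling. When you script the checks that follow, a small retry helper avoids flaky failures. This is a minimal sketch: the `retry` name and the attempt and delay values in the usage line are assumptions.

```bash
#!/usr/bin/env bash
# retry ATTEMPTS DELAY_SECONDS CMD [ARGS...]: run CMD until it succeeds,
# sleeping DELAY_SECONDS between tries; on failure return CMD's last exit status
retry() {
  local attempts=$1 delay=$2 n=1 status
  shift 2
  while true; do
    "$@" && return 0
    status=$?
    if [ "$n" -ge "$attempts" ]; then
      echo "retry: '$*' failed after $n attempts" >&2
      return "$status"
    fi
    n=$((n + 1))
    sleep "$delay"
  done
}
```

Usage: `retry 10 3 curl -fsS https://helloworld-go.default.s.example.com` tries up to 10 times, 3 seconds apart. `curl -f` turns HTTP errors into a non-zero exit code. If you only need to wait for readiness, `kubectl wait --for=condition=Ready ksvc/helloworld-go -n default --timeout=120s` is a built-in alternative.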

```
kubectl apply -f hello-service.yaml
```

Verify it is ready:

```
kubectl get ksvc -n default
```

output

```
NAME URL LATESTCREATED LATESTREADY READY REASON
helloworld-go https://helloworld-go.default.s.example.com helloworld-go-00001 helloworld-go-00001 True
```

Finally, test that the helloworld service is accessible from outside:

```
curl https://helloworld-go.default.s.example.com
```

output

```
Hello Go Sample v1!
```

Configure a custom domain for the helloworld-go service

hello-domain-mapping.yaml

```yaml
apiVersion: serving.knative.dev/v1alpha1
kind: DomainMapping
metadata:
  name: hello.example.com
  namespace: default
spec:
  ref:
    name: helloworld-go
    kind: Service
    apiVersion: serving.knative.dev/v1
```

```
kubectl apply -f hello-domain-mapping.yaml
```

Verify it is ready:

```
kubectl get domainmapping -n default
```

output

```
NAME URL READY REASON
hello.example.com https://hello.example.com True
```

Then call the service through the custom domain:

```
curl https://hello.example.com
```

output

```
Hello Go Sample v1!
```

That’s all! In this post we deployed a Knative service and made it securely available to our customers 🍻.

```
kubectl get all -A
```

output

```
NAMESPACE NAME READY STATUS RESTARTS AGE
cert-manager pod/cert-manager-5d6bc46969-btqdd 1/1 Running 1 (5h51m ago) 24h
cert-manager pod/cert-manager-cainjector-7d8b8bb6b8-rjsx5 1/1 Running 1 (5h50m ago) 24h
cert-manager pod/cert-manager-webhook-5c5c5bb457-6zjcp 1/1 Running 0 24h
container-registry pod/registry-9865b655c-ftngg 1/1 Running 0 23h
default pod/knative-operator-7b7d4bbc7d-pj48l 1/1 Running 0 22h
default pod/operator-webhook-74d9489bf8-7vdhr 1/1 Running 0 22h
istio-ingress pod/istio-ingressgateway-7f5958c7d9-l2qfc 1/1 Running 0 24h
istio-operator pod/istio-operator-79d6df8f9d-m9zkw 1/1 Running 3 (5h51m ago) 25h
istio-system pod/istiod-57c965889-pdpv5 1/1 Running 0 29h
knative-serving pod/activator-658c5747b-zzvjm 1/1 Running 0 21h
knative-serving pod/autoscaler-f989fbf86-fzszz 1/1 Running 0 21h
knative-serving pod/autoscaler-hpa-5d7668d747-gs6zs 1/1 Running 0 21h
knative-serving pod/controller-64b9dcc975-h4vnq 1/1 Running 0 21h
knative-serving pod/domain-mapping-7b87f895b6-zxbkk 1/1 Running 0 21h
knative-serving pod/domainmapping-webhook-54cddcb594-xxmgl 1/1 Running 0 21h
knative-serving pod/net-certmanager-controller-575898d58-bv6x5 1/1 Running 0 20h
knative-serving pod/net-certmanager-webhook-7cd899c855-pps7t 1/1 Running 0 20h
knative-serving pod/net-istio-controller-84cb8b59fb-dxvgq 1/1 Running 0 21h
knative-serving pod/net-istio-webhook-8d785b78d-jwqqf 1/1 Running 0 21h
knative-serving pod/webhook-7698bcf68f-qxd7f 1/1 Running 0 21h
kube-system pod/coredns-7745f9f87f-lswgs 1/1 Running 2 (28h ago) 47h
kube-system pod/csi-nfs-controller-6f844cdc89-slhdt 3/3 Running 12 (5h51m ago) 30h
kube-system pod/csi-nfs-node-r6h7c 3/3 Running 6 (28h ago) 30h
kube-system pod/hostpath-provisioner-58694c9f4b-ztmgq 1/1 Running 3 (5h51m ago) 23h
metallb-system pod/controller-8467d88d69-sqlsf 1/1 Running 0 25h
metallb-system pod/speaker-j4b5r 1/1 Running 0 25h

NAMESPACE NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE
cert-manager service/cert-manager ClusterIP 10.152.183.71 <none> 9402/TCP 24h
cert-manager service/cert-manager-webhook ClusterIP 10.152.183.177 <none> 443/TCP 24h
container-registry service/registry NodePort 10.152.183.166 <none> 5000:32000/TCP 23h
default service/helloworld-go ExternalName <none> knative-local-gateway.istio-ingress.svc.cluster.local 80/TCP 19h
default service/helloworld-go-00001 ClusterIP 10.152.183.45 <none> 80/TCP,443/TCP 19h
default service/helloworld-go-00001-private ClusterIP 10.152.183.68 <none> 80/TCP,443/TCP,9090/TCP,9091/TCP,8022/TCP,8012/TCP 19h
default service/kubernetes ClusterIP 10.152.183.1 <none> 443/TCP 47h
default service/operator-webhook ClusterIP 10.152.183.107 <none> 9090/TCP,8008/TCP,443/TCP 22h
istio-ingress service/istio-ingressgateway LoadBalancer 10.152.183.50 10.0.0.5 15021:30688/TCP,80:30792/TCP,443:32182/TCP 25h
istio-ingress service/knative-local-gateway ClusterIP 10.152.183.228 <none> 80/TCP 21h
istio-operator service/istio-operator ClusterIP 10.152.183.132 <none> 8383/TCP 25h
istio-system service/istiod ClusterIP 10.152.183.139 <none> 15010/TCP,15012/TCP,443/TCP,15014/TCP 29h
istio-system service/knative-local-gateway ClusterIP 10.152.183.216 <none> 80/TCP 21h
knative-serving service/activator-service ClusterIP 10.152.183.19 <none> 9090/TCP,8008/TCP,80/TCP,81/TCP,443/TCP 21h
knative-serving service/autoscaler ClusterIP 10.152.183.49 <none> 9090/TCP,8008/TCP,8080/TCP 21h
knative-serving service/autoscaler-bucket-00-of-01 ClusterIP 10.152.183.147 <none> 8080/TCP 21h
knative-serving service/autoscaler-hpa ClusterIP 10.152.183.156 <none> 9090/TCP,8008/TCP 21h
knative-serving service/controller ClusterIP 10.152.183.35 <none> 9090/TCP,8008/TCP 21h
knative-serving service/domainmapping-webhook ClusterIP 10.152.183.238 <none> 9090/TCP,8008/TCP,443/TCP 21h
knative-serving service/net-certmanager-controller ClusterIP 10.152.183.104 <none> 9090/TCP,8008/TCP 20h
knative-serving service/net-certmanager-webhook ClusterIP 10.152.183.227 <none> 9090/TCP,8008/TCP,443/TCP 20h
knative-serving service/net-istio-webhook ClusterIP 10.152.183.138 <none> 9090/TCP,8008/TCP,443/TCP 21h
knative-serving service/webhook ClusterIP 10.152.183.150 <none> 9090/TCP,8008/TCP,443/TCP 21h
kube-system service/kube-dns ClusterIP 10.152.183.10 <none> 53/UDP,53/TCP,9153/TCP 47h
metallb-system service/webhook-service ClusterIP 10.152.183.163 <none> 443/TCP 25h

NAMESPACE NAME DESIRED CURRENT READY UP-TO-DATE AVAILABLE NODE SELECTOR AGE
kube-system daemonset.apps/csi-nfs-node 1 1 1 1 1 kubernetes.io/os=linux 30h
metallb-system daemonset.apps/speaker 1 1 1 1 1 kubernetes.io/os=linux 25h

NAMESPACE NAME READY UP-TO-DATE AVAILABLE AGE
cert-manager deployment.apps/cert-manager 1/1 1 1 24h
cert-manager deployment.apps/cert-manager-cainjector 1/1 1 1 24h
cert-manager deployment.apps/cert-manager-webhook 1/1 1 1 24h
container-registry deployment.apps/registry 1/1 1 1 23h
default deployment.apps/helloworld-go-00001-deployment 0/0 0 0 19h
default deployment.apps/knative-operator 1/1 1 1 22h
default deployment.apps/operator-webhook 1/1 1 1 22h
istio-ingress deployment.apps/istio-ingressgateway 1/1 1 1 25h
istio-operator deployment.apps/istio-operator 1/1 1 1 25h
istio-system deployment.apps/istiod 1/1 1 1 29h
knative-serving deployment.apps/activator 1/1 1 1 21h
knative-serving deployment.apps/autoscaler 1/1 1 1 21h
knative-serving deployment.apps/autoscaler-hpa 1/1 1 1 21h
knative-serving deployment.apps/controller 1/1 1 1 21h
knative-serving deployment.apps/domain-mapping 1/1 1 1 21h
knative-serving deployment.apps/domainmapping-webhook 1/1 1 1 21h
knative-serving deployment.apps/net-certmanager-controller 1/1 1 1 20h
knative-serving deployment.apps/net-certmanager-webhook 1/1 1 1 20h
knative-serving deployment.apps/net-istio-controller 1/1 1 1 21h
knative-serving deployment.apps/net-istio-webhook 1/1 1 1 21h
knative-serving deployment.apps/webhook 1/1 1 1 21h
kube-system deployment.apps/coredns 1/1 1 1 47h
kube-system deployment.apps/csi-nfs-controller 1/1 1 1 30h
kube-system deployment.apps/hostpath-provisioner 1/1 1 1 23h
metallb-system deployment.apps/controller 1/1 1 1 25h

NAMESPACE NAME DESIRED CURRENT READY AGE
cert-manager replicaset.apps/cert-manager-5d6bc46969 1 1 1 24h
cert-manager replicaset.apps/cert-manager-cainjector-7d8b8bb6b8 1 1 1 24h
cert-manager replicaset.apps/cert-manager-webhook-5c5c5bb457 1 1 1 24h
container-registry replicaset.apps/registry-9865b655c 1 1 1 23h
default replicaset.apps/helloworld-go-00001-deployment-6466c46f55 0 0 0 19h
default replicaset.apps/knative-operator-7b7d4bbc7d 1 1 1 22h
default replicaset.apps/operator-webhook-74d9489bf8 1 1 1 22h
istio-ingress replicaset.apps/istio-ingressgateway-77b5b78896 0 0 0 25h
istio-ingress replicaset.apps/istio-ingressgateway-7f5958c7d9 1 1 1 25h
istio-operator replicaset.apps/istio-operator-79d6df8f9d 1 1 1 25h
istio-system replicaset.apps/istiod-57c965889 1 1 1 29h
knative-serving replicaset.apps/activator-658c5747b 1 1 1 21h
knative-serving replicaset.apps/autoscaler-f989fbf86 1 1 1 21h
knative-serving replicaset.apps/autoscaler-hpa-5d7668d747 1 1 1 21h
knative-serving replicaset.apps/controller-64b9dcc975 1 1 1 21h
knative-serving replicaset.apps/domain-mapping-7b87f895b6 1 1 1 21h
knative-serving replicaset.apps/domainmapping-webhook-54cddcb594 1 1 1 21h
knative-serving replicaset.apps/net-certmanager-controller-575898d58 1 1 1 20h
knative-serving replicaset.apps/net-certmanager-webhook-7cd899c855 1 1 1 20h
knative-serving replicaset.apps/net-istio-controller-84cb8b59fb 1 1 1 21h
knative-serving replicaset.apps/net-istio-webhook-8d785b78d 1 1 1 21h
knative-serving replicaset.apps/webhook-7698bcf68f 1 1 1 21h
kube-system replicaset.apps/coredns-7745f9f87f 1 1 1 47h
kube-system replicaset.apps/csi-nfs-controller-6f844cdc89 1 1 1 30h
kube-system replicaset.apps/hostpath-provisioner-58694c9f4b 1 1 1 23h
metallb-system replicaset.apps/controller-8467d88d69 1 1 1 25h

NAMESPACE NAME REFERENCE TARGETS MINPODS MAXPODS REPLICAS AGE
istio-ingress horizontalpodautoscaler.autoscaling/istio-ingressgateway Deployment/istio-ingressgateway <unknown>/80% 1 5 1 25h
istio-system horizontalpodautoscaler.autoscaling/istiod Deployment/istiod <unknown>/80% 1 5 1 29h
knative-serving horizontalpodautoscaler.autoscaling/activator Deployment/activator <unknown>/100% 1 20 1 21h
knative-serving horizontalpodautoscaler.autoscaling/webhook Deployment/webhook <unknown>/100% 1 5 1 21h

NAMESPACE NAME LATESTCREATED LATESTREADY READY REASON
default configuration.serving.knative.dev/helloworld-go helloworld-go-00001 helloworld-go-00001 True

NAMESPACE NAME CONFIG NAME K8S SERVICE NAME GENERATION READY REASON ACTUAL REPLICAS DESIRED REPLICAS
default revision.serving.knative.dev/helloworld-go-00001 helloworld-go 1 True 0 0

NAMESPACE NAME URL READY REASON
default route.serving.knative.dev/helloworld-go https://helloworld-go.default.s.taakcloud.com True

NAMESPACE NAME URL LATESTCREATED LATESTREADY READY REASON
default service.serving.knative.dev/helloworld-go https://helloworld-go.default.s.taakcloud.com helloworld-go-00001 helloworld-go-00001 True

NAMESPACE NAME URL READY REASON
default domainmapping.serving.knative.dev/hello.taakcloud.com https://hello.taakcloud.com True
```